Configure High Availability and Workload Isolation

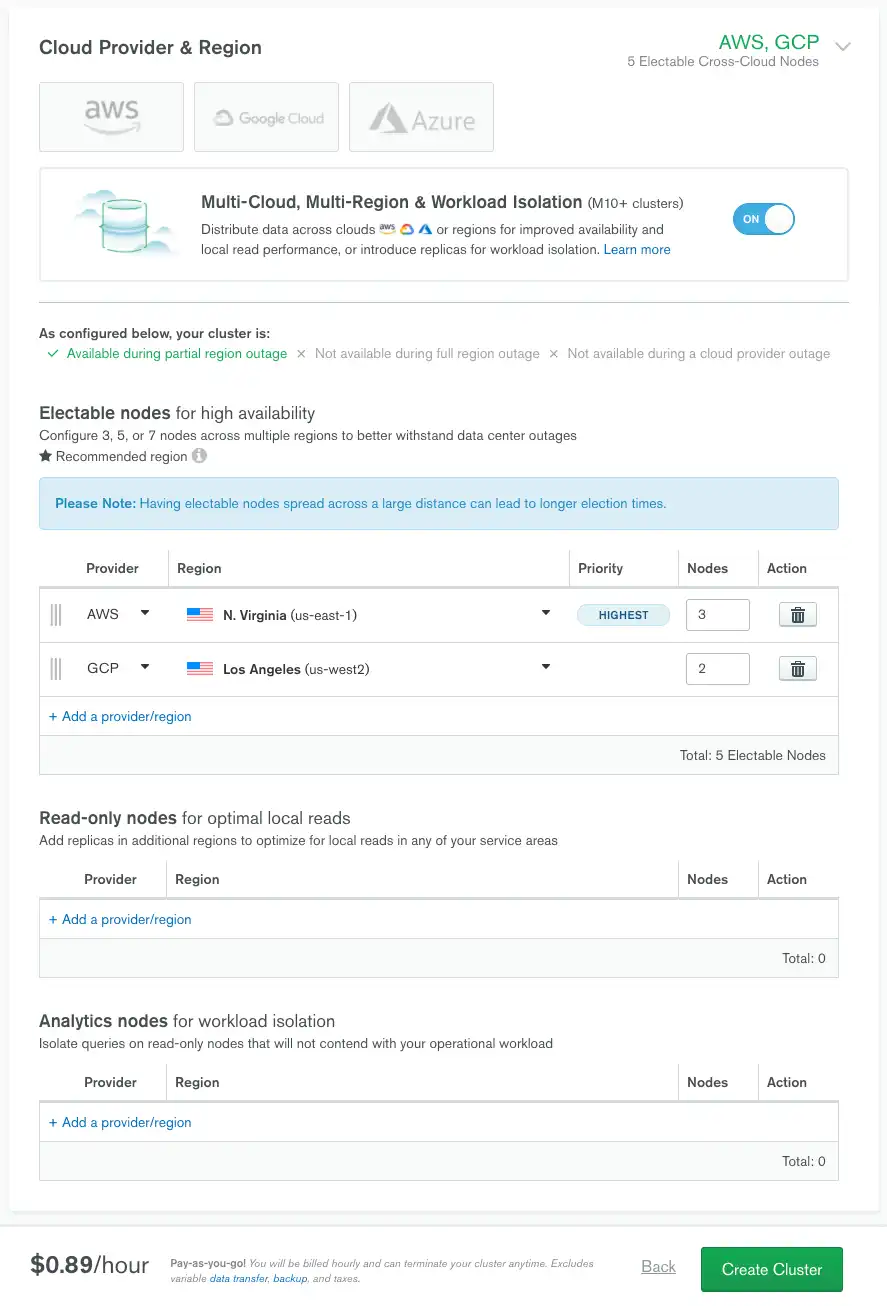

You can create multi-cloud MongoDB deployments in Atlas using any combination of cloud providers: AWS, Azure, and Google Cloud.

You can set the nodes in your MongoDB deployment to use different:

- Cloud providers

- Geographic regions

- Workload priorities

- Replication configurations

Using these options allows you to improve the availability and workload balancing of your cluster.

To configure node-specific options for your cluster, toggle Multi-Cloud, Multi-Region & Workload Isolation (M10+ clusters) to On.

A cluster may be hosted in:

Multiple regions within a single cloud provider.

Multiple regions across multiple cloud providers.

As each cloud provider has its own set of regions, multi-cloud clusters are also multi-region clusters.

Considerations

Atlas does not guarantee that host names remain consistent with respect to node types during topology changes.

Example

If you have a cluster named foo123 containing an analytics node foo123-shard-00-03-a1b2c.mongodb.net:27017, Atlas does not guarantee that specific host name will continue to refer to an analytics node after a topology change, such as scaling a cluster to modify its number of nodes or regions.

In sharded clusters, Atlas distributes the three config server nodes based on the number of electable regions in the cluster. If the cluster has:

Only one electable region, Atlas deploys all three config nodes in that region.

Two electable regions, Atlas deploys two config nodes in the highest priority region and one config node in the second highest priority region.

Three or more electable regions, Atlas deploys one config node in each of the three highest priority regions.
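The distribution rule above can be sketched as a small function. This is only an illustration of the rule as stated here, not Atlas code; the function name and the idea of passing regions as a priority-ordered list are assumptions made for the example.

```python
def config_node_distribution(electable_regions):
    """Map each region to its number of config nodes.

    `electable_regions` is a list of region names ordered from highest
    to lowest priority (a hypothetical input format for this sketch).
    """
    if not electable_regions:
        raise ValueError("a cluster needs at least one electable region")
    if len(electable_regions) == 1:
        counts = [3]          # all three config nodes in the only region
    elif len(electable_regions) == 2:
        counts = [2, 1]       # two in the highest priority region, one in the next
    else:
        counts = [1, 1, 1]    # one in each of the three highest priority regions
    # zip stops at the shorter sequence, so lower-priority regions get no entry
    return dict(zip(electable_regions, counts))


print(config_node_distribution(["us-east-1", "us-west-2"]))
```

Regions beyond the third highest priority are simply absent from the result, which matches the statement that only the three highest priority regions receive a config node.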

Having a large number of regions or having nodes spread across long distances may lead to long election times or replication lag.

A cluster change that adds, removes, or modifies voting members will take longer, since Atlas adds, removes, or modifies voting members one at a time on a rolling basis.

Clusters can span regions and cloud service providers. The total number of nodes in clusters spanning across regions has a specific constraint on a per-project basis.

Atlas limits the total number of nodes in other regions in one project to a total of 40. This total excludes:

Google Cloud regions communicating with each other

Free clusters or shared clusters

Serverless instances

Sharded clusters include additional nodes. The electable nodes on the dedicated Config Server Replica Set (CSRS) count towards the total number of allowable nodes. Each sharded cluster has an additional electable node per region as part of the dedicated CSRS. To learn more, see Replica Set Config Servers.

The total number of nodes between any two regions must meet this constraint.

Example

If an Atlas project has nodes in clusters spread across three regions:

30 nodes in Region A

10 nodes in Region B

5 nodes in Region C

You can only add 5 more nodes to Region C because:

If you exclude Region C, Region A + Region B = 40.

If you exclude Region B, Region A + Region C = 35, <= 40.

If you exclude Region A, Region B + Region C = 15, <= 40.

Each combination of regions with the added 5 nodes still meets the per-project constraint:

Region A + B = 40

Region A + C = 40

Region B + C = 20
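The arithmetic in this example can be checked with a short sketch. It reads the constraint the way the example does, as a cap of 40 on the combined node count of any two regions, and it deliberately ignores the exclusions listed earlier and the extra config server nodes in sharded clusters. The function names are hypothetical.

```python
from itertools import combinations

MAX_NODES_PER_REGION_PAIR = 40  # per-project limit described in this section


def pairs_within_limit(nodes_by_region, limit=MAX_NODES_PER_REGION_PAIR):
    """Return True if every pair of regions has at most `limit` nodes combined."""
    return all(
        nodes_by_region[a] + nodes_by_region[b] <= limit
        for a, b in combinations(nodes_by_region, 2)
    )


def max_additional_nodes(nodes_by_region, region, limit=MAX_NODES_PER_REGION_PAIR):
    """Return how many nodes `region` can gain before some pair exceeds `limit`."""
    others = [count for name, count in nodes_by_region.items() if name != region]
    largest_other = max(others, default=0)  # the tightest pair involves the largest other region
    return max(0, limit - nodes_by_region[region] - largest_other)


project = {"A": 30, "B": 10, "C": 5}
print(max_additional_nodes(project, "C"))  # 5, as in the example above
```

Region A is the binding constraint here: Region C can grow only until A + C reaches 40, regardless of how much headroom remains in the B + C pair.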

You can't create a multi-region cluster in a project if it has one or more clusters spanning 40 or more nodes in other regions.

Contact Atlas support for questions or assistance with raising this limit.

Atlas provides built-in custom write concerns for multi-region clusters. Use these write concerns to ensure your write operations propagate to a desired number of regions, thereby ensuring data consistency across your regions. To learn more, see Built-In Custom Write Concerns.

The number of availability zones, zones, or fault domains in a region has no effect on the number of MongoDB nodes Atlas can deploy. MongoDB Atlas clusters are always made of replica sets with a minimum of three MongoDB nodes.

If you use the standard connection string format rather than the DNS seedlist format, removing an entire region from an existing cross-region cluster may result in a new connection string.

To verify the correct connection string after deploying the changes:

1. In Atlas, go to the Clusters page for your project.

If it is not already displayed, select the organization that contains your desired project from the Organizations menu in the navigation bar.

If it is not already displayed, select your desired project from the Projects menu in the navigation bar.

If the Clusters page is not already displayed, click Database in the sidebar.

2. Click Connect.

If you plan on creating one or more VPC peering connections on your first M10+ dedicated paid cluster for the selected region or regions, first review the documentation on VPC Peering Connections.

Electable Nodes for High Availability

If you add regions with electable nodes, you increase data availability and reduce the impact of data center outages.

You may set different regions from one cloud provider or choose different cloud providers.

Atlas treats the region in the first row of the Electable nodes table as the Highest Priority region.

Atlas prioritizes nodes in this region for primary eligibility. Other nodes rank in the order that they appear. For more information, see Member Priority.

Each electable node can:

Participate in replica set elections.

Become the primary while the majority of nodes in the replica set remain available.

Add Electable Nodes

You can add electable nodes in one cloud provider and region from the Electable nodes for high availability section.

To add an electable node:

Click Add a provider/region.

Select the cloud provider from the Provider dropdown.

Select the region from the Region dropdown.

When you change the Provider option, the Region changes to a blank option. If you don't select a region, Atlas displays an error when you click Create Cluster.

Specify the desired number of Nodes for the provider and region.

The total number of electable nodes across all providers and regions in the cluster must equal 3, 5, or 7.

Atlas marks recommended regions with an icon. These regions provide higher availability than other regions.
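The rule that electable nodes must total 3, 5, or 7 across all providers and regions can be checked before you submit a configuration. The sketch below is a local sanity check only; the function name and the dictionary keyed by provider and region are assumptions for illustration, not part of any Atlas tool.

```python
VALID_ELECTABLE_TOTALS = {3, 5, 7}  # totals allowed by the rule above


def validate_electable_nodes(nodes_by_region):
    """Return the total electable node count, or raise if it is not 3, 5, or 7."""
    total = sum(nodes_by_region.values())
    if total not in VALID_ELECTABLE_TOTALS:
        raise ValueError(
            f"electable nodes total {total}; expected one of "
            f"{sorted(VALID_ELECTABLE_TOTALS)}"
        )
    return total


# A 5-node layout split across two providers passes the check.
layout = {("AWS", "us-east-1"): 3, ("Google Cloud", "us-west3"): 2}
print(validate_electable_nodes(layout))  # 5
```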


Remove Electable Nodes

To remove a node from a region, click the icon to the right side of that region. You cannot remove a node in the Highest Priority region.

To learn more, see Multi-Region Cluster Backups.

Change Electable Nodes to Read-Only Nodes

You can change an electable node to a read-only node by adding a read-only node and removing an electable node at the same time. To learn more, see Change Workload Purpose of Nodes.

Improve the Availability of a Cluster

To improve the redundancy and availability of a cluster, increase the number of electable nodes in a region. Every Atlas cluster has a Highest Priority region. If your cluster spans multiple regions, you can select which cloud provider region should be the Highest Priority.

To prevent loss of availability and performance, consider the following scenarios:

| Point of Failure | How to Prevent this Point of Failure |
|---|---|
| Cloud Provider | Minimum of one node set in all three cloud providers. More than one node per region. |
| Region | Minimum of one node set in three or more different regions. More than one node per region. |
| Node | |

Change the Highest Priority Provider or Region

If you change the Highest Priority provider and region in an active multi-region cluster, Atlas selects a new primary node in the provider and region that you specify (assuming that the number of nodes in each provider and region remains the same and nothing else is modified).

Example

If you have an active 5-node cluster with the following configuration:

| Nodes | Provider | Region | Priority |
|---|---|---|---|
| 3 | AWS | us-east-1 | Highest |
| 2 | Google Cloud | us-west3 | |

To make the Google Cloud us-west3 nodes the Highest Priority, drag its row to the top of your cluster's Electable nodes list. After this change, Atlas elects a new PRIMARY in us-west3. Atlas doesn't start an initial sync or re-provision hosts when changing this configuration.

Important

Certain circumstances may delay an election of a new primary.

Example

A sharded cluster with heavy workloads on its primary shard may delay the election. As a result, the primary nodes of the shards might temporarily not all be in the same region.

To minimize these risks, avoid modifying your primary region during periods of heavy workload.

Read-Only Nodes for Optimal Local Reads

Use read-only nodes to optimize local reads in the nodes' respective service areas.

Add Read-Only Nodes

You can add read-only nodes from the Read-Only Nodes for Optimal Local Reads section.

To add a read-only node in one cloud provider and region:

Click Add a provider/region.

Select the cloud provider from the Provider dropdown.

Select the region from the Region dropdown.

When you change the Provider option, the Region changes to a blank option. If you don't select a region, Atlas displays an error when you click Create Cluster.

Specify the desired number of Nodes for the provider and region.

Atlas marks recommended regions with an icon. These regions provide higher availability than other regions.

Read-only nodes don't provide high availability because they don't participate in elections. They can't become the primary for their cluster. Read-only nodes have distinct read preference tags that allow you to direct queries to desired regions.

Remove Read-Only Nodes

To remove all read-only nodes in one cloud provider and region, click the icon to the right of that cloud provider and region.

Change Workload Purpose of Nodes

You can change a node's workload purpose by adding and removing nodes at the same time.

Note

You must add and remove the node within the same configuration change to reuse a node. If you remove the node, save the change, then add the node, Atlas provisions a new node instead.

For example, to change a read-only node to an electable node:

Click Review Changes.

Click Apply Changes.

Analytics Nodes for Workload Isolation

Use analytics nodes to isolate queries that you don't want to compete with your operational workload. Analytics nodes help handle data analysis operations, such as reporting queries from BI Connector for Atlas. Analytics nodes have distinct replica set tags that allow you to direct queries to desired regions.

Click Add a region to select a region in which to deploy analytics nodes. Specify the desired number of Nodes for the region.

Note

The readPreference and readPreferenceTags connection string options are unavailable for the mongo shell. Use cursor.readPref() and Mongo.setReadPref() instead.
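For drivers that do accept these connection string options, the sketch below shows one way to assemble a URI that targets tagged nodes. The host name and helper name are placeholders, and the nodeType:ANALYTICS tag value is an assumption to verify against the replica set tags documented for your cluster.

```python
from urllib.parse import urlencode


def build_tagged_read_uri(host, tag, mode="secondary"):
    """Build a mongodb+srv URI that routes reads to members carrying `tag`.

    `host` and `tag` are supplied by the caller; nothing here contacts Atlas.
    """
    options = {"readPreference": mode, "readPreferenceTags": tag}
    # Leave ':' and ',' unescaped so the tag stays readable as key:value pairs.
    return f"mongodb+srv://{host}/?{urlencode(options, safe=':,')}"


# Placeholder host and an assumed tag value, for illustration only.
print(build_tagged_read_uri("cluster0.example.mongodb.net", "nodeType:ANALYTICS"))
```

The secondary read preference mode is used as the default here because analytics nodes can't become the primary for their cluster.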

Add Analytics Nodes

You can add analytics nodes from the Analytics nodes for workload isolation section.

To add analytics nodes in one cloud provider and region:

Click Add a provider/region.

Select the cloud provider from the Provider dropdown.

Select the region from the Region dropdown.

When you change the Provider option, the Region changes to a blank option. If you don't select a region, Atlas displays an error when you click Create Cluster.

Specify the desired number of Nodes for the provider and region.

Atlas marks recommended regions with an icon. These regions provide higher availability than other regions.

Analytics nodes don't provide high availability because they don't participate in elections. They can't become the primary for their cluster.

Select a Cluster Tier for Your Analytics Nodes

Your workloads can vary greatly between analytics and operational nodes.

To help manage this issue, for M10+ clusters, you can select a cluster tier appropriately sized for your analytics workload. You can select a cluster tier for your analytics nodes that's larger or smaller than the cluster tier selected for your electable and read-only nodes (operational nodes). This functionality helps to ensure that you get the performance required for your transactional and analytical queries without over- or under-provisioning your entire cluster for your analytical workload.

The following considerations apply to the Analytics Tier tab and analytics nodes:

Important

If you select a cluster tier on the Analytics Tier tab significantly below the cluster tier selected on the Base Tier tab, replication lag might result. The analytics node might fall off the oplog altogether.

If you select a General cluster tier on the Analytics Tier tab and a Low-CPU cluster tier on the Base Tier tab, disk auto-scaling isn't supported for the cluster. Disk auto-scaling also isn't supported if you select a General cluster tier on the Base Tier tab and a Low-CPU cluster tier on the Analytics Tier tab.

Disk size and IOPS must remain the same across all node types.

Storage size must match between the Base Tier tab and Analytics Tier tab. You can set the storage size on the Base Tier tab.

If you want to select the Local NVME SSD class on the Base Tier tab, the Analytics Tier tab must have the same tier level selected.

If a cluster tier appears grayed out, the cluster tier isn't compatible with the disk size of the cluster or the Local NVME SSD class.

A cluster tier selected on the Analytics Tier tab is priced the same as a cluster tier selected on the Base Tier tab. However, when an Analytics Tier is higher or lower than the Base Tier, the price adjusts accordingly on a prorated per-node basis. The pricing appears in the Atlas UI when you create or edit a cluster. To learn more, see Manage Billing.

After you add your analytics nodes, you can select a cluster tier appropriately sized for your analytics workload.

In the Cluster Tier section, click the Analytics Tier tab.

Select the Cluster Tier.

Remove Analytics Nodes

To remove all analytics nodes in one cloud provider and region, click the icon to the right of that cloud provider and region.

Search Nodes for Workload Isolation

You can set the nodes in your cluster to run only the Atlas Search mongot process. When you run the Atlas Search mongot process separately, you improve the availability and workload balancing of the mongot process. To learn more, see Search Nodes Architecture.

Considerations

On M10 or higher Atlas clusters running MongoDB v6.0 and higher, you can configure Search Nodes separately from the database nodes. Review the following before deploying Atlas Search nodes separately.

Cluster Tier

You can deploy Search Nodes for dedicated (M10 or higher) clusters only. You can't add Search Nodes on free (M0) and shared (M2 and M5) tier clusters. You can use the Atlas UI and the Atlas Administration API to provision Search Nodes for new and existing clusters on AWS, Google Cloud, or Azure.

Cloud Provider

You can host the Search Nodes on any cloud provider. You can't deploy Search Nodes separately for serverless instances or global clusters.

Cloud Provider Regions

Atlas deploys the Search Nodes in the same AWS, Google Cloud, or Azure regions as your electable, read-only, and analytics nodes.

Atlas supports deploying Search Nodes separately for workload isolation in any Google Cloud and Azure regions. However, you can't deploy Search Nodes in certain AWS regions. The following Atlas UI behavior applies:

If you select any of the following AWS regions for your cluster nodes first, Atlas disables the Search nodes for workload isolation toggle.

If you enable Search nodes for workload isolation first, Atlas disables the following regions in the region dropdown under Electable nodes for high availability.

Atlas doesn't support the following AWS regions for search nodes.

| Region Name | AWS Region |
|---|---|
| Paris | eu-west-3 |
| Zurich | eu-central-2 |
| Milan | eu-south-1 |
| Spain | eu-south-2 |
| UAE | me-central-1 |
| Bahrain | me-south-1 |
| Cape Town | af-south-1 |
| Hong Kong | ap-east-1 |
| Jakarta | ap-southeast-3 |
| Melbourne | ap-southeast-4 |
| Hyderabad | ap-south-2 |

To deploy Search Nodes separately, you must select a supported AWS, Google Cloud, or Azure region from the Electable nodes for high availability dropdown. To learn more about the supported regions, see Regions for Dedicated Search Nodes. Atlas automatically uses the same region for read-only and analytics nodes on your cluster. After deployment, you can't change the cloud provider or cloud provider region for your Atlas cluster.

Multi-Region and Multi-Cloud Clusters

Atlas supports deploying Search Nodes across multiple regions and cloud providers. When deploying Search Nodes for multi-region or multi-cloud clusters, consider the following:

Atlas deploys the same number of Search Nodes in each region.

All nodes across regions have the same search tier.

If you use the Atlas Administration API to add a new region to existing Search Nodes, Atlas deploys the same number of Search Nodes in the new region. However, if the new region does not support the current search tier, the request fails.

Search Tier

You can select a search tier for your Search Nodes in the Search Tier tab.

By default, Atlas deploys Search Nodes on S20. You can select a higher tier for faster queries and more complex aggregations, or a lower tier for smaller workloads. For some tiers, you can also choose between low-CPU, which is recommended for Atlas Vector Search, and high-CPU, which is optimized for Atlas Search.

For Search Nodes deployed on AWS, Atlas provides different search tiers in different regions. If the search tier that you selected is not available for your region, Atlas automatically deploys the search nodes in the next higher tier that is available in that region. To learn more, see AWS Search Tiers.

To learn more about search tiers for Search Nodes deployed on Google Cloud or Azure, see:

Add Search Nodes

To configure separate Search Nodes on your Atlas cluster, do the following:

Toggle Multi-Cloud, Multi-Region & Workload Isolation (M10+ clusters) to On.

Toggle Search nodes for workload isolation to On.

Specify the number of nodes to deploy. You can specify between 2 and 32 nodes.

After deployment, you can modify the cluster to add and remove any additional Search Nodes.

Note

For multi-region clusters, Atlas deploys the specified number of nodes in each region. To learn more, see Multi-Region and Multi-Cloud Clusters.

Click the checkbox to confirm that you understand and agree to the considerations for creating a cluster with Search Nodes.

Expand Cluster Tier to select a tier for your search nodes in the Search Tiers tab.

To learn more about the different tiers for your Search Nodes, see Search Tier.

To create a search node for a cluster using the Atlas CLI, run the following command:

atlas clusters search nodes create [options]

To learn more about the command syntax and parameters, see the Atlas CLI documentation for atlas clusters search nodes create.


Note

You can add Search Nodes to an existing Atlas cluster only if the cluster currently has no Search Nodes and you have never created Atlas Search indexes on it.

To configure separate Search Nodes on your Atlas cluster, send a POST request to the Atlas Search resource /deployment endpoint. You must specify the number of nodes and the instance size. You can deploy between 2 and 32 nodes. To learn more, see Create Search Nodes.
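As a rough sketch of what such a request might carry, the helper below validates the node count and assembles a method, path, and JSON body without sending anything. The URL path, the specs, instanceSize, and nodeCount field names, and the S20_HIGHCPU_NVME value are assumptions based on one reading of the Create Search Nodes resource; confirm them, and the required authentication and headers, in the Atlas Administration API reference before use.

```python
import json

MIN_SEARCH_NODES, MAX_SEARCH_NODES = 2, 32  # range stated in this section


def build_create_search_nodes_request(group_id, cluster_name, node_count, instance_size):
    """Return (method, path, body) for a hypothetical Create Search Nodes call."""
    if not MIN_SEARCH_NODES <= node_count <= MAX_SEARCH_NODES:
        raise ValueError(
            f"node_count must be between {MIN_SEARCH_NODES} and {MAX_SEARCH_NODES}"
        )
    # Assumed path layout; verify against the API reference.
    path = f"/api/atlas/v2/groups/{group_id}/clusters/{cluster_name}/search/deployment"
    # Assumed body shape: one spec with the instance size and the node count.
    body = {"specs": [{"instanceSize": instance_size, "nodeCount": node_count}]}
    return "POST", path, json.dumps(body)


method, path, body = build_create_search_nodes_request(
    "<project-id>", "<cluster-name>", 2, "S20_HIGHCPU_NVME"
)
print(method, path)
print(body)
```

Rejecting an out-of-range node count locally avoids a round trip for a request that the 2 to 32 node range would rule out anyway.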

When you add Search Nodes to a cluster that doesn't have any, two things happen in parallel. The existing mongot processes running alongside mongod continue to tail the writes to the database that happen after Atlas Search completed the initial sync, and the mongot processes on the new dedicated Search Nodes perform an initial sync on the required collections. This results in dual reads during the migration process.

Remove Search Nodes

To remove some Search Nodes, adjust the Number of Search Nodes setting under Search nodes for workload isolation. You can deploy between 2 and 32 Search Nodes on your cluster.

To remove all Search Nodes on your Atlas cluster, do the following:

Toggle Search nodes for workload isolation to Off.

You can now select any region from the available cloud providers.

Click Remove to confirm in the Are you sure you want to remove Search Nodes? modal.

Click Review Changes.

Click the checkbox to confirm that you understand and agree to the considerations for deleting a cluster with Search Nodes.

There will be a brief interruption in processing your search query results.

Click Apply Changes.

To delete a search node for a cluster using the Atlas CLI, run the following command:

atlas clusters search nodes delete [options]

To learn more about the command syntax and parameters, see the Atlas CLI documentation for atlas clusters search nodes delete.


To add or remove Search Nodes or modify the search tier on your Atlas cluster, send a PATCH request to the Atlas Search resource /deployment endpoint.

You can specify the following:

The number of nodes to remove. If omitted, Atlas doesn't change the current number of deployed nodes.

The instance size to use to switch to a different search tier. If omitted, Atlas doesn't modify the current search tier for the deployed nodes.

To learn more, see Update Search Nodes.

To remove all Search Nodes on your Atlas cluster, send a DELETE request to the Atlas Search resource /deployment endpoint. To learn more, see Delete Search Nodes.

If you delete all existing Search Nodes on your cluster, there will be a brief interruption in processing your search query results while Atlas migrates from mongot processes running separately on dedicated Search Nodes to mongot processes running alongside mongod. However, you won't experience any downtime or stale data while indexes are migrated.

Limitations

If you're connecting to a multi-cloud deployment through a private connection, you can access only the nodes in the same cloud provider that you're connecting from. This cloud provider might not have the primary node in its region. When this happens, you must specify the secondary read preference mode in the connection string to access the deployment.

If you need access to all nodes for your multi-cloud deployment from your current provider through a private connection, you must:

Configure a VPN in the current provider to each of the remaining providers.

Configure a private endpoint to Atlas for each of the remaining providers.