In the rapidly evolving field of artificial intelligence (AI), large language models (LLMs) have emerged as powerful tools that are changing the way we interact with technology, generate content, and perform natural language processing (NLP) tasks. These models are designed to understand and generate human language with remarkable accuracy and sophistication.

Table of contents

- What is an LLM?

- The rise of large language models: a brief history

- So, what is a transformer model?

- How do large language models work?

- What is the difference between a large language model (LLM) and natural language processing (NLP)?

- Large language model examples in real-world applications

What is an LLM?

An LLM, or large language model, is a type of natural language processing (NLP) model and a groundbreaking form of artificial intelligence (AI). LLMs are trained on massive datasets of text and code, which enables them to perform a wide range of tasks, from translating languages to writing creative content and answering your questions informatively. LLMs have reshaped how we interact with technology: using one can feel much like conversing with a knowledgeable person.

The rise of large language models: a brief history

Systems that use natural language processing for conversational experiences have existed for decades, but only recently have they become powerful and sophisticated enough for a wide range of tasks. One of the earliest was ELIZA, a chatbot created in the 1960s, but its capabilities were very limited. It was not until the 2010s that chatbots matured enough for real-world applications.

A pivotal moment in LLM advancement arrived with the introduction of the transformer architecture in 2017. The transformer model significantly improved the understanding of word relationships within sentences, resulting in text generation that is both grammatically correct and semantically coherent.

In recent years, LLMs have been pre-trained on datasets containing hundreds of billions of tokens (words and word fragments) of text and code, leading to substantial improvements in their performance across a variety of tasks. For example, some LLMs can now generate text that is nearly indistinguishable from human writing.

So, what is a transformer model?

A transformer model is a type of deep learning model and a pivotal advancement in artificial intelligence and natural language processing, one that has transformed a wide range of language-related tasks. Transformers are designed to understand and generate human language by modeling the relationships between words within a sentence.

One of the defining features of transformer models is a technique called "self-attention." Self-attention lets the model process each word in a sentence while weighing the context provided by other words in that sentence, and it handles all the words in parallel. This is a significant departure from earlier language models such as recurrent neural networks (RNNs), which processed words one at a time, and it is a key reason for the success of transformers.
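To make self-attention more concrete, here is a minimal Python sketch of scaled dot-product attention, the form of self-attention used in the original transformer, applied to a made-up three-word sentence. It assumes toy two-dimensional word vectors and skips the learned query, key, and value projections a real transformer applies (here each word's query, key, and value are simply its input vector). The helper names `softmax` and `self_attention` are illustrative, not taken from any library.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    # Subtract the max score first for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention over a list of word vectors.

    Simplification (assumption): real transformers derive queries, keys,
    and values with learned weight matrices; here all three are the input
    vectors themselves.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word (including itself), scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # Each output is the attention-weighted average of all value vectors.
        outputs.append([sum(w * value[j] for w, value in zip(weights, vectors)) for j in range(d)])
    return outputs, all_weights


# Made-up 2-dimensional embeddings for a three-word sentence.
sentence = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for word, row in zip(sentence, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how strongly one word attends to every word in the sentence. The weights in a row sum to 1, and each word's output vector is the correspondingly weighted average of all the word vectors, which is how context from the rest of the sentence flows into every position.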

Transformer models have become the backbone of many modern large language models. By employing transformer models, developers and researchers have been able to create more sophisticated and contextually aware AI systems that interact with natural language in increasingly human-like ways, ultimately leading to significant improvements in user experiences and AI applications.

How do large language models work?

Large language models function by utilizing deep learning techniques to process and generate human language.

- Data collection: The first step in training LLMs involves collecting a massive dataset of text and code from the internet. This dataset comprises a wide range of human-written content, providing the LLMs with a diverse language foundation.

- Pre-training: During the pre-training phase, LLMs are exposed to this vast dataset. They learn to predict the next word in a sentence, which teaches them the statistical relationships between words and phrases. This process enables them to pick up grammar, syntax, and some contextual understanding.

- Fine-tuning: After pre-training, LLMs are fine-tuned for specific tasks. This involves exposing them to a narrower dataset related to the desired application, such as translation, sentiment analysis, or text generation. Fine-tuning refines their ability to perform these tasks effectively.

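- Contextual understanding: LLMs use the words surrounding a given word to interpret it, which allows them to generate coherent, contextually relevant text. Generative models such as GPT attend only to the words that come before, while encoder models such as BERT also use the words that come after. This depth of contextual awareness is a major advance over earlier language models.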

- Task adaptation: Thanks to fine-tuning, LLMs can adapt to a broad array of tasks. They can answer questions, generate human-like text, translate languages, summarize documents, and more. This adaptability is one of the key strengths of LLMs.

- Deployment: Once trained, LLMs can be deployed in various applications and systems. They power chatbots, content generation engines, search engines, and other AI applications, enhancing user experiences.
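The pre-training objective described above, predicting the next word, can be illustrated with a deliberately tiny stand-in. The Python sketch below is a bigram model that simply counts which word follows which in a three-sentence corpus. It is not how an LLM is built (LLMs learn these statistics with a neural network over tokens and much longer contexts), and the function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn follow-up counts into a probability distribution over next words."""
    followers = counts.get(word.lower())
    if not followers:
        return {}
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def predict_next(counts, word):
    """Return the most probable next word, or None if the word was never seen."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None


corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
print(predict_next(model, "sat"))
```

Here the model assigns "cat" a probability of 1/3 after "the", because "cat" is the next word in two of the six places where "the" is followed by another word. An LLM learns a far richer version of this same kind of distribution, conditioned on the whole preceding context rather than a single word.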

In summary, LLMs first learn the intricacies of human language through pre-training on massive datasets. They are then fine-tuned for specific tasks, building on that contextual understanding. This adaptability makes them versatile tools for a wide range of natural language processing applications.

Note that selecting an LLM for your use case, as well as pre-training, fine-tuning, and otherwise customizing it, happens independently of MongoDB Atlas (and therefore outside of Atlas Vector Search).

What is the difference between a large language model (LLM) and natural language processing (NLP)?

Natural language processing (NLP) is a field of computer science dedicated to enabling interactions between computers and human language, both spoken and written. Its goal is to give computers the ability to understand, interpret, and manipulate human language, with applications such as machine translation, speech recognition, text summarization, and question answering.

Large language models (LLMs), on the other hand, are a specific category of NLP model. They are trained on vast collections of text and code, which enables them to learn intricate statistical relationships between words and phrases. As a result, LLMs can generate text that is both coherent and contextually relevant, and they can be used for tasks such as text generation, translation, and question answering.

Large language model examples in real-world applications

Enhanced customer service

Imagine a company seeking to improve its customer service. It uses a large language model to build a chatbot that answers customer questions about its products and services. The chatbot is trained on extensive datasets of customer questions, corresponding answers, and detailed product documentation. Because it can interpret what a customer is actually asking, it can provide precise, informative responses.

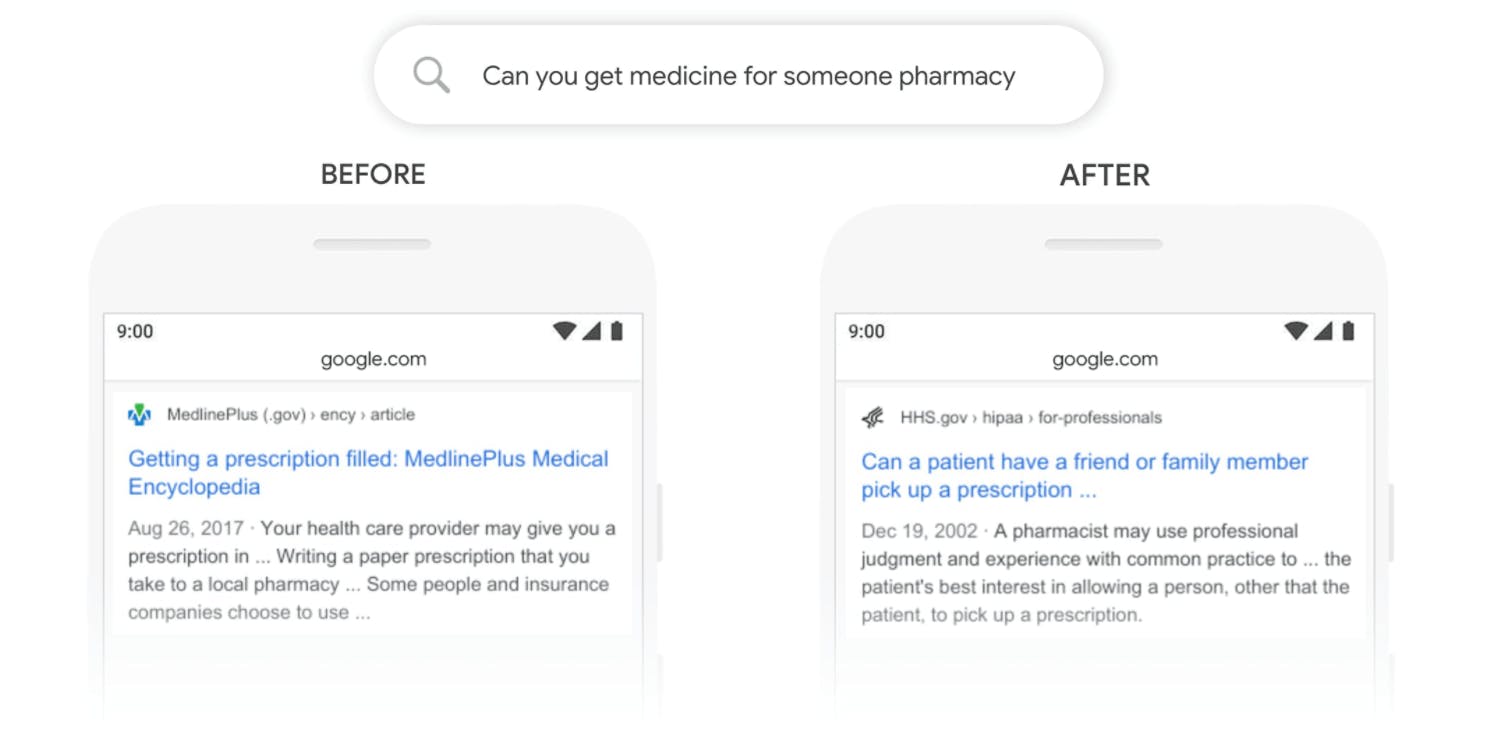

Smarter search engines

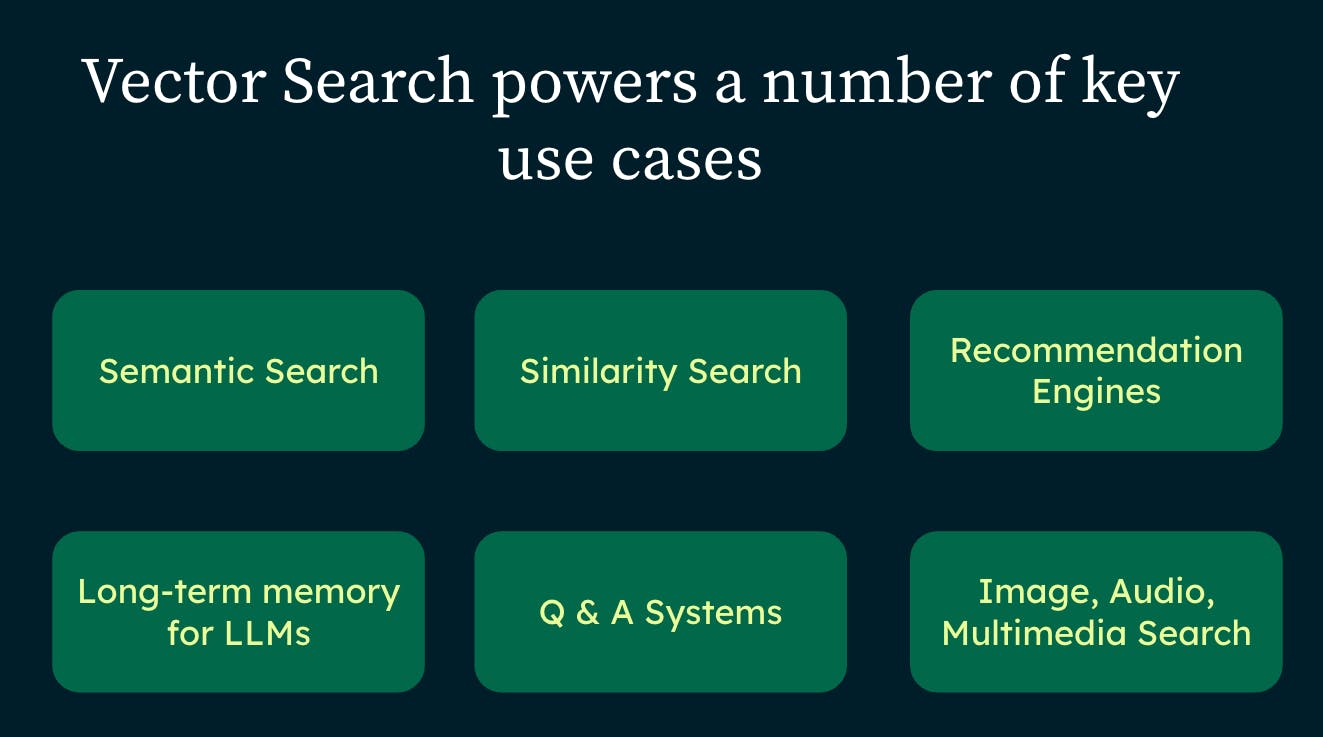

Search engines are part of our daily lives, and LLMs are making them more intuitive. These models can interpret what you're searching for even when you don't phrase it perfectly, helping retrieve the most relevant results from vast databases and improving your online search experience.
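One way search systems use language models to cope with imperfect phrasing is semantic (vector) search: queries and documents are converted into embedding vectors, and results are ranked by how close those vectors are. The Python sketch below assumes the embeddings have already been computed. The three-dimensional vectors, document titles, and the `search` helper are made up for illustration; a production system would get its vectors from an embedding model and run the nearest-neighbor search in a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, documents, top_k=2):
    """Rank documents by cosine similarity to the query embedding."""
    scored = [(cosine_similarity(query_vector, vec), title) for title, vec in documents.items()]
    scored.sort(reverse=True)  # Highest similarity first.
    return [title for _, title in scored[:top_k]]


# Hypothetical 3-dimensional embeddings; real embedding models produce
# vectors with hundreds or thousands of dimensions.
documents = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Shipping times and tracking": [0.1, 0.9, 0.1],
    "Recovering a locked account": [0.8, 0.2, 0.1],
}
# Pretend embedding of the misspelled query "forgot pasword login".
query = [0.85, 0.15, 0.05]
print(search(query, documents))
```

Cosine similarity compares the direction of two vectors rather than their length, so documents about the same topic can rank highly even when they share no exact keywords with the query, as with the account-recovery article here.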

Personalized recommendations

When you shop online or watch videos on streaming platforms, you often see recommendations for products or content you might like. LLMs can help power these recommendations, analyzing your past behavior to suggest items that match your tastes and making your online experience more personalized.

Creative content generation

LLMs are not just data processors; they can also produce creative work. Built on deep learning, they can generate content ranging from blog posts and product descriptions to poetry. This saves time and helps businesses craft engaging content for their audiences.

By incorporating LLMs, businesses are improving their customer interactions, search functionality, product recommendations, and content creation, ultimately transforming the tech landscape.

Types of large language models

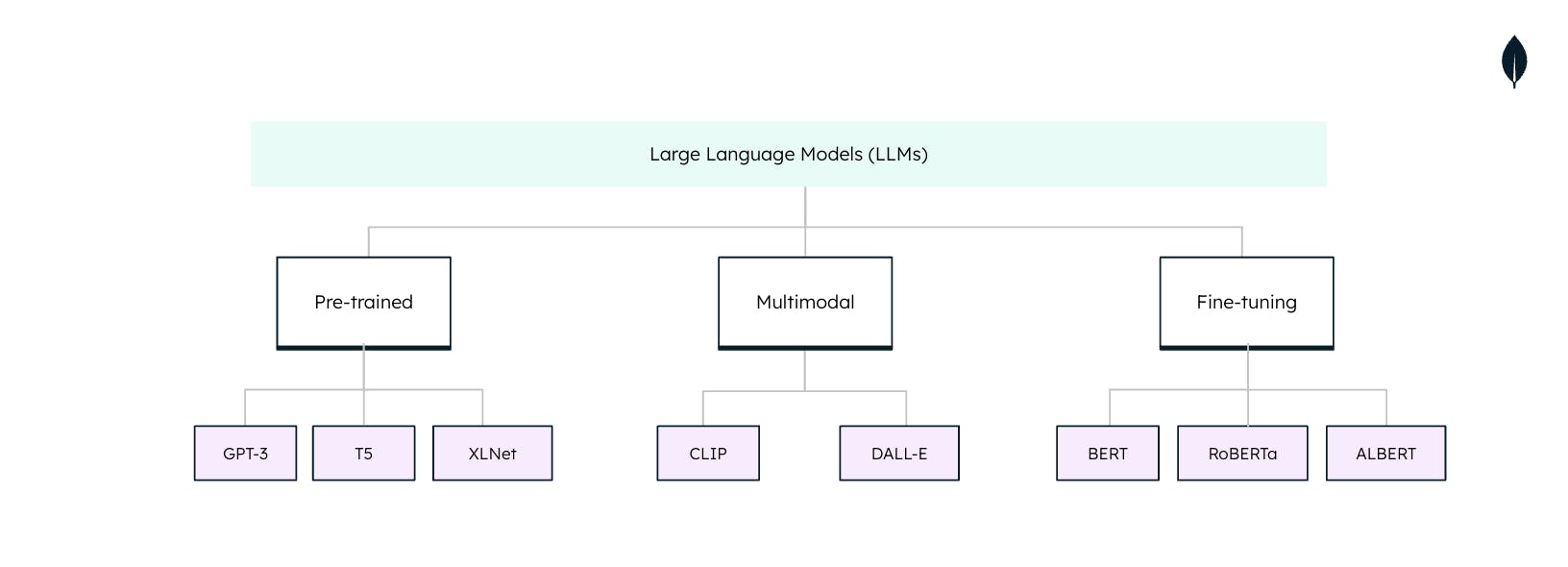

Large language models (LLMs) are not one-size-fits-all in natural language processing (NLP). Different LLMs are designed or tuned for different tasks and applications, and understanding these types is essential to harnessing their full potential: